Overview

- ChatGPT is an advanced AI chatbot that has shown potential for medical applications, but it is still in a research preview stage and not approved for medical use.

- While promising, ChatGPT can reflect biases from its training data and lacks human abilities like empathy that impact patient care.

- Doctors should thoughtfully consider both the benefits and limitations of ChatGPT before utilizing it professionally to ensure patient safety and equitable treatment.

Editor's note: This article was updated on February 20, 2024.

In a watershed moment in artificial intelligence, an advanced new chatbot dubbed ChatGPT has amazed users with its capabilities to generate unique, in-depth content across an array of topics — including medicine.

Doctors and medical professionals have taken to social media to demonstrate fascinating use cases for this new technology. Still, there are clear and present issues within ChatGPT that must be addressed before it can be safely and effectively used in the healthcare setting.

This post will discuss:

1. How does ChatGPT work?

2. Potential applications to the medical field

3. How to use ChatGPT effectively

4. What doctors should know before using ChatGPT

How does ChatGPT work?

Developed by San Francisco-based artificial intelligence company OpenAI, ChatGPT is a highly advanced chatbot that can deliver detailed responses to a dizzying range of inquiries and requests.

Much like your internet search bar, users only have to type in a query or question to get an answer from ChatGPT. ChatGPT draws on patterns learned from a large body of training text, including books, articles, and web pages, to generate an answer. In most cases, that answer is generated in under 30 seconds.

Applications to the medical field

The potential applications of ChatGPT to the medical field are manifold. Since its release, a number of medical professionals have shared their experiences with ChatGPT on social media. (To be clear, these individuals self-selected to post about ChatGPT on their personal accounts.)

A rheumatologist in Palm Beach Gardens, Florida, posted a viral video of how his office saved time and effort by using ChatGPT to write a letter to an insurance company to get a certain medication approved for coverage for one of his patients.

A medical student based in Australia posted a video of ChatGPT arriving at a diagnosis after a refined series of prompts.

Another user posted a ChatGPT use case involving medical school interviews.

Other potential applications include summarizing medical histories or analyzing medical research papers, as described in a post by the Medical Futurist. Practice staff could also use ChatGPT to draft letters, such as one terminating a patient relationship.

The applications of AI to the medical profession only seem to be limited by our own imaginations. Still, there are already warning signs that medical professionals should be aware of before engaging with this technology on a professional basis.

What doctors should know before using ChatGPT

Keep these considerations in mind before utilizing ChatGPT.

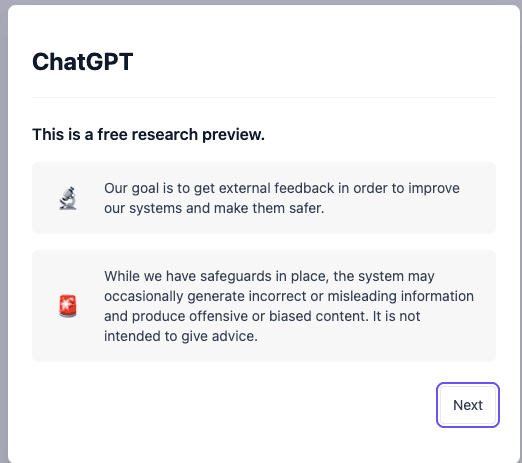

1. ChatGPT is only available in a ‘research preview’

ChatGPT is only available to the public in a “research preview” format and has not been approved for use in medical practices by any US regulatory body, such as the Food and Drug Administration (FDA). Therefore, it should not be relied upon for diagnosis or care.

When a user first signs up for an account with OpenAI, they receive the following disclaimer:

This message explicitly warns users that ChatGPT should not be relied upon, particularly in a medical setting where accurate information is critical to patient care.

In fact, the Palm Beach rheumatologist whose video went viral later posted a follow-up advising his followers to be wary of plausible-sounding but entirely fabricated research references generated by ChatGPT.

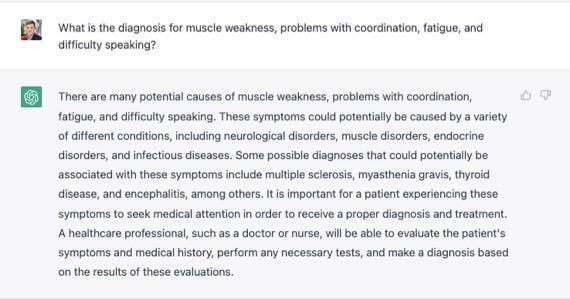

OpenAI seems to have anticipated potential over-reliance by including an additional disclaimer when responding to medical prompts.

Here is an example of what happened when we asked ChatGPT to diagnose an imaginary “patient” exhibiting various fictitious symptoms. (Reminder: ChatGPT is not approved for diagnoses by any regulatory body.)

Critically, while ChatGPT does provide possible diagnoses, the system warns that proper diagnosis and treatment involve evaluation by a medical professional (that is, a human with medical training). Medical professionals should not rely on ChatGPT for diagnoses.

2. The more specific the prompt, the better

Using ChatGPT to streamline tasks in a medical practice can be a game-changer, but what it generates will only be as strong as what is put in. Doctors and staff should strive to:

- Be as specific as possible

- Use precise language

- Determine what they’re trying to achieve with the results

- Consider how much revising they are willing to do

It can be effective to share as much information as possible in the query, but providers must refrain from entering patient-identifying information into the program, which could violate HIPAA.
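One way a practice might turn these guidelines into a repeatable habit is a small prompt-building helper. The Python sketch below is illustrative only: `PromptSpec`, `build_prompt`, `find_identifiers`, and the identifier patterns are hypothetical names invented for this example. The pattern check catches only a few obvious formats (Social Security numbers, phone numbers, email addresses, numeric dates). It is not a de-identification tool, and a prompt that passes it is not necessarily safe to submit.

```python
import re
from dataclasses import dataclass

# Hypothetical and deliberately incomplete patterns for a few obvious identifiers.
# Real patient-identifying information takes many more forms (names, addresses, etc.).
IDENTIFIER_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "date": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


@dataclass
class PromptSpec:
    task: str             # what the chatbot should produce, stated specifically
    audience: str         # who will read the result
    goal: str             # what the result is meant to achieve
    details: str          # supporting facts, with no patient identifiers
    max_words: int = 250  # a length cap limits how much revising is needed


def find_identifiers(text: str) -> list[str]:
    """Return the names of the identifier patterns that match the text."""
    return [name for name, pattern in IDENTIFIER_PATTERNS.items()
            if pattern.search(text)]


def build_prompt(spec: PromptSpec) -> str:
    """Assemble a specific prompt, refusing if an obvious identifier is present."""
    combined = " ".join([spec.task, spec.audience, spec.goal, spec.details])
    found = find_identifiers(combined)
    if found:
        raise ValueError(f"Possible patient identifiers found: {', '.join(found)}")
    return (
        f"Task: {spec.task}\n"
        f"Audience: {spec.audience}\n"
        f"Goal: {spec.goal}\n"
        f"Details: {spec.details}\n"
        f"Length: no more than {spec.max_words} words"
    )
```

Filling in every field forces the person writing the prompt to state the task, the reader, and the intended outcome up front. The identifier check is a backstop, not a substitute for staff reviewing what they type before they submit it.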

3. ChatGPT is only as good as the data it has analyzed, which contains biases, stereotypes, and other inequities detrimental to patient care

Mutale Nkonde, founder of an algorithmic justice organization, spoke to The Washington Post about a disconcerting feature of Lensa, the AI-based image generation service: “Due to the lack of representation of dark-skinned people both in AI engineering and training images, the models tend to do worse analyzing and reproducing images of dark-skinned people.”

This susceptibility to harmful content is true of ChatGPT as well. Despite safeguards built into the platform, major news outlets such as Bloomberg and The Daily Beast have reported racist and sexist content produced by ChatGPT.

Harmful AI-generated content works at cross-purposes with critical priorities in patient care, such as health equity.

The Centers for Disease Control and Prevention (CDC) defines health equity as “the state in which everyone has a fair and just opportunity to attain their highest level of health.” Achieving this, according to the CDC, requires ongoing societal efforts to:

- Address historical and contemporary injustices

- Overcome economic, social, and other obstacles to health and health care

- Eliminate preventable health disparities

While ChatGPT has the potential to improve medical care by streamlining simple tasks, its outputs must be closely monitored as they could miss critical health equity issues. Practitioners should use their knowledge, training, and experience to evaluate whether AI-generated content could be detrimental to patient care.

4. ChatGPT cannot replace key human behaviors such as empathy, which can be highly beneficial to patient outcomes

In the medical publication Nature, two writers considered the promise and pitfalls of GPT-3, ChatGPT’s predecessor.

The authors argued that “interactions with GPT-3 that look (or sound) like interactions with a living, breathing — and empathetic or sympathetic — human being are not.”

This means that even with the adoption of powerful new technologies capable of turbocharging efficiencies for medical practices (especially small and mid-size practices), there are certain things that can only be done by humans.

Empathy in particular, and its benefits to patient care, has been explored by many researchers.

Should doctors use ChatGPT? 3 Key takeaways

- ChatGPT is a highly advanced chatbot developed by OpenAI that can generate unique, in-depth content across an array of topics.

- Doctors and other medical professionals have demonstrated potential use cases for ChatGPT, such as writing letters to insurance companies or arriving at diagnoses after prompting.

- Before using it professionally, medical professionals should be aware that ChatGPT is only available in a research preview format and has not been approved by any regulatory body; that its outputs may contain biases detrimental to patient care; and that it cannot replace key human behaviors such as empathy, which are beneficial to patient outcomes.

For now, as everyone contends with the awe-inspiring promise and disruptive potential of ChatGPT, doctors, nurses, administrators, and other medical professionals should keep in mind that AI is evolving rapidly and carefully consider its applications to patient care.

Disclaimer: This information is provided as a courtesy to assist in your understanding of the impact of certain healthcare developments and should not be construed as legal advice.
